SOS Open Source Finally Goes Open!

(Author: Davide Galletti) As promised and announced about one year ago by its author, Roberto Galoppini, SOS Open Source is finally going open source. Over the last year the EU-funded PROSE project received the SOS Open Source code base and documentation; below you will find more about what has been done to turn it into OSSEval.

Having worked on both the original version and the current one, I am happy to share some insights into what has been done beyond changing the name and choosing the license (AGPL).

Let’s start from the beginning, with where things stood. SOS Open Source had not been updated in over three years, which caused problems with its built-in search features: search engines change their APIs and search results every couple of years, or even more often. Those changes led to partial results and broken scraping, eventually making the SOS Open Source search feature more of a pain than anything else.

While polishing the current version, Bitergia and I talked about rewriting it in a more modern programming language, Python. Using the Django 1.6 framework, I essentially rewrote it from scratch, leaving its logic unaltered and keeping MySQL as its database.

Graph generation has also been improved, and it now uses SVG.

The whole tool has been translated into English. Beyond rewriting, polishing and translating it, we took the opportunity to enhance the tool and, to some extent, generalize it.

What is New

Building on the SOS Open Source methodology and practice, we added simple suggestions for improving each metric. Drawing on past experience, we can now give project stakeholders ideas for improving their projects’ marks, turning the assessment tool into a wizard that returns tips and hints based on the current marks.
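To make the wizard idea concrete, here is a minimal sketch of how tips could be tied to marks. It is not OSSEval’s code: the metric names, the 0 to 5 mark scale, the threshold of 3 and the tip texts are all hypothetical, chosen only to show the "low mark, show a hint" logic.

```python
# Hypothetical sketch: metric names, the 0-5 mark scale, the threshold and the
# tip texts are illustrative assumptions, not OSSEval's actual data.
from typing import Dict, List

# Each (hypothetical) metric maps to a suggestion shown when its mark is low.
TIPS: Dict[str, str] = {
    "binaries_available": "Publish ready-to-run binaries or a VM/cloud demo.",
    "documentation": "Add installation and user guides.",
    "community_activity": "Open a public mailing list or forum and answer questions.",
}


def suggest_tips(marks: Dict[str, float], threshold: float = 3.0) -> List[str]:
    """Return the tip for every known metric whose mark is below the threshold."""
    tips = []
    for metric, mark in sorted(marks.items()):  # sorted for a stable order
        if mark < threshold and metric in TIPS:
            tips.append(f"{metric}: {TIPS[metric]}")
    return tips


if __name__ == "__main__":
    project_marks = {"binaries_available": 1, "documentation": 4, "community_activity": 2}
    for tip in suggest_tips(project_marks):
        print(tip)
```

A real methodology would likely attach graded hints per mark level rather than a single threshold; the single cut-off just keeps the sketch short.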

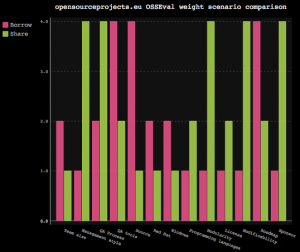

One of the most interesting new features is Weighted Profiles, which address two different target audiences: organizations that want either to borrow or to share open source software. The way it works is pretty simple: each metric’s mark is multiplied by a weight that changes depending on the selected profile (see graph).
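The weighting described above can be sketched in a few lines. This is an illustration under stated assumptions, not OSSEval’s implementation: the metric names, the weight values for the "borrow" and "share" profiles, the default weight of 1.0 for unlisted metrics, and summing the weighted marks into a total are all hypothetical choices.

```python
# Hypothetical sketch: metric names, weights, the default weight and the
# summed total are illustrative assumptions; OSSEval's real values may differ.
from typing import Dict

PROFILES: Dict[str, Dict[str, float]] = {
    # Borrowers care most about how easy the software is to try out.
    "borrow": {"binaries_available": 3.0, "documentation": 2.0, "community_activity": 1.0},
    # Sharers care most about the community they would be joining.
    "share": {"binaries_available": 1.0, "documentation": 2.0, "community_activity": 3.0},
}


def weighted_marks(marks: Dict[str, float], profile: str) -> Dict[str, float]:
    """Multiply each metric's mark by the weight the chosen profile assigns to it."""
    weights = PROFILES[profile]
    return {metric: mark * weights.get(metric, 1.0) for metric, mark in marks.items()}


def total_score(marks: Dict[str, float], profile: str) -> float:
    """Aggregate the weighted marks (a plain sum, assumed for illustration)."""
    return sum(weighted_marks(marks, profile).values())


if __name__ == "__main__":
    marks = {"binaries_available": 4, "documentation": 3, "community_activity": 1}
    for name in PROFILES:
        print(name, total_score(marks, name))
```

With these made-up marks, a project with good binaries but a weak community scores 19 under "borrow" and 13 under "share": the same marks rank differently depending on who is asking.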

For example, organizations interested in borrowing existing open source software will rely heavily on the availability of binaries, or better still VMs or cloud demos, that make it very easy to test. On the other hand, organizations willing to use and enhance an existing open source artifact would be more interested in knowing how the community backing that project works.

Last but not least, more flexibility has been added to the methodology engine. Although OSSEval was designed to assess open source software, it can handle different entities with different methodologies, giving full choice over both the type of questions and the depth of the associated queries.

As a result, OSSEval may be used to assess movies, retrieving some basic information directly from IMDb, or any other kind of entity whose data is available via an API (e.g. books or music).
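One way to picture such a generic engine is a methodology made of nested questions, some of which can be answered automatically from external data. The sketch below is hypothetical throughout: the class names, fields and movie questions are invented for illustration, and the hard-coded `record` dictionary stands in for data fetched from an API such as IMDb (no real API call is made or implied).

```python
# Hypothetical sketch of a generic methodology engine: class names, fields and
# the movie example are illustrative assumptions, not OSSEval's actual models.
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List, Optional, Tuple


@dataclass
class Question:
    text: str
    # Optional callable that answers the question from external data (e.g. a
    # record fetched from some API); None means a human must answer it.
    auto_answer: Optional[Callable[[Dict[str, object]], object]] = None
    # Sub-questions let each methodology choose its own depth.
    children: List["Question"] = field(default_factory=list)


@dataclass
class Methodology:
    name: str
    entity_type: str  # e.g. "software", "movie", "book"
    questions: List[Question]


def walk(questions: List[Question], depth: int = 0) -> Iterator[Tuple[int, Question]]:
    """Yield (depth, question) pairs for the whole question tree, depth-first."""
    for q in questions:
        yield depth, q
        yield from walk(q.children, depth + 1)


def evaluate(methodology: Methodology, record: Dict[str, object]) -> Dict[str, object]:
    """Answer every question that can be resolved automatically from the record."""
    answers: Dict[str, object] = {}
    for _, q in walk(methodology.questions):
        if q.auto_answer is not None:
            answers[q.text] = q.auto_answer(record)
    return answers


movie_methodology = Methodology(
    name="Movie assessment (hypothetical)",
    entity_type="movie",
    questions=[
        Question("Basic information", children=[
            Question("Release year?", auto_answer=lambda r: r.get("year")),
            Question("Director?", auto_answer=lambda r: r.get("director")),
        ]),
        Question("Is the plot engaging?"),  # left for a human reviewer
    ],
)

if __name__ == "__main__":
    # Placeholder data standing in for an API response.
    record = {"title": "Example Movie", "year": 2000, "director": "Jane Doe"}
    print(evaluate(movie_methodology, record))
```

Keeping the automatic answers as plain callables means the same engine can serve software, movies or books: only the methodology definition and the data source change.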